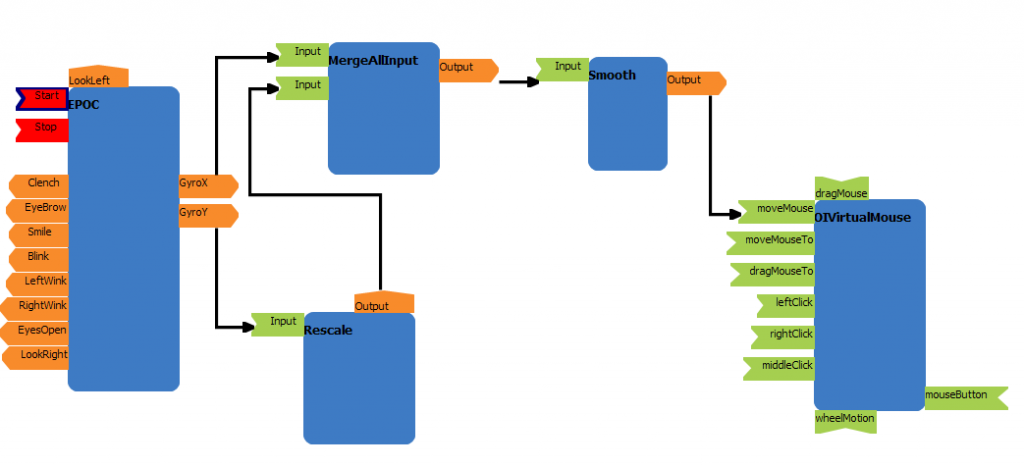

Here is the OpenInterface “code” used for the demonstration (click to zoom):

Tag Archives: OpenInterface

Controlling the computer mouse using the Emotiv Epoc

Using the Emotiv Epoc with the Emotiv API

After using OpenVibe to get the signals, we spent time on the Emotiv API.

It directly provides dedicated functions for three types of behavior:

- The emotions;

- The cognition;

- The expressions.

The last one gives the most results in the least time, so we decided to use it (we don’t have time to explore all the possibilities the Epoc offers).

It is also easy to use and, in a short time, we managed to implement it in OpenInterface.

Here is a list of the different expressions that we can recognize (recognition works in most of our tests):

- Eye blink

- Eyebrow movement

- Left wink

- Right wink

- Looking to the right

- Looking to the left

- Clenched teeth

- Smile
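
As a rough illustration of how these detections could drive an application, the sketch below enumerates the eight expressions and maps a few of them to mouse actions. It does not call the Emotiv API: the `Expression` names, the `ACTIONS` table and the `dispatch` function are all hypothetical, and the mapping is an assumption, not necessarily the one used in our demonstration.

```python
from enum import Enum, auto


class Expression(Enum):
    """The eight facial expressions listed above (names are illustrative)."""
    BLINK = auto()
    EYEBROW = auto()
    WINK_LEFT = auto()
    WINK_RIGHT = auto()
    LOOK_RIGHT = auto()
    LOOK_LEFT = auto()
    CLENCH = auto()
    SMILE = auto()


# Hypothetical mapping from an expression to a mouse action.
# Each entry is (kind, dx, dy): "move" shifts the pointer by (dx, dy),
# "click_left" / "click_right" report a button press.
ACTIONS = {
    Expression.LOOK_LEFT: ("move", -10, 0),
    Expression.LOOK_RIGHT: ("move", 10, 0),
    Expression.WINK_LEFT: ("click_left", 0, 0),
    Expression.WINK_RIGHT: ("click_right", 0, 0),
}


def dispatch(expression, position):
    """Return (new_position, event) for a detected expression.

    position is an (x, y) tuple; event is None when no click is triggered.
    Expressions without a mapping leave the pointer untouched.
    """
    kind, dx, dy = ACTIONS.get(expression, ("none", 0, 0))
    x, y = position
    if kind == "move":
        return (x + dx, y + dy), None
    if kind in ("click_left", "click_right"):
        return position, kind
    return position, None
```

In a real component, the returned position and event would be forwarded to whatever moves the system pointer; keeping the mapping in a table makes it easy to try other assignments.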

Get the OpenVibe signals (using VRPN server) into OpenInterface

First, read “Skemmi-QuickStart.pdf” (how to install OpenInterface and first steps in the Skemmi environment) and “OpenInterfaceDeveloperComponentGuide.pdf” (how to implement a new component).

Now, here is a step-by-step guide on how to implement the OpenVibe VRPN server in OpenInterface.

OpenInterface

The second step of the IP will be to use the data received from the sensors in an application (such as a multimedia application).

It is very likely that the SDK used to get the data from the sensors and the SDK used to develop the application (or any other element in the chain) don’t use the same language!

For example, we may need to use a C++ SDK to get the data from a sensor, process the signal with a Java library, and use the resulting data in music software that uses OSC messages.
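
To make the last link of such a chain more concrete, here is a minimal sketch of how a processed value could be packed into an OSC message, following the OSC 1.0 encoding (null-padded strings aligned on 4 bytes, big-endian 32-bit floats). The helper names `_pad` and `osc_message` and the address `/sensor/alpha` are hypothetical, and only float arguments are handled.

```python
import struct


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Encode an OSC message whose arguments are all 32-bit floats."""
    # The type tag string starts with a comma, followed by one "f" per argument.
    type_tags = "," + "f" * len(values)
    # OSC numbers are big-endian, hence the ">" in the struct format.
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


# Example: one processed sensor value sent under a made-up address.
message = osc_message("/sensor/alpha", 0.5)
```

The resulting bytes could then be sent in a UDP datagram to an OSC-aware program, which does not need to know in which language the earlier stages were written.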

Each member of the team will be in charge of one of these processes, and it could be difficult to combine them all in the end.

This is why we may use OpenInterface.

What is OpenInterface?